- 413

- 4 673 985

Artificial Intelligence - All in One

Canada

Joined 24 Mar 2016

Hey guys! In this channel, you will find content related to Artificial Intelligence (AI), Deep Learning (DL), Machine Learning (ML), Natural Language Processing (NLP), Computer Vision (CV), and special topics in data science. Please make sure to smash the LIKE button and SUBSCRIBE to our channel to learn more about these trending topics, and don’t forget to TURN ON your UA-cam notifications!

Thanks 🙂

Lecture 39 — Recommender Systems Content based Filtering -- Part 2 | UIUC

🔔 Stay Connected! Get the latest insights on Artificial Intelligence (AI) 🧠, Natural Language Processing (NLP) 📝, and Large Language Models (LLMs) 🤖. Follow ( mtnayeem) on Twitter 🐦 for real-time updates, news, and discussions in the field.

Check out the following interesting papers. Happy learning!

Paper Title: "On the Role of Reviewer Expertise in Temporal Review Helpfulness Prediction"

Paper: aclanthology.org/2023.findings-eacl.125/

Dataset: huggingface.co/datasets/tafseer-nayeem/review_helpfulness_prediction

Paper Title: "Abstractive Unsupervised Multi-Document Summarization using Paraphrastic Sentence Fusion"

Paper: aclanthology.org/C18-1102/

Paper Title: "Extract with Order for Coherent Multi-Document Summarization"

Paper: aclanthology.org/W17-2407.pdf

Paper Title: "Paraphrastic Fusion for Abstractive Multi-Sentence Compression Generation"

Paper: dl.acm.org/doi/abs/10.1145/3132847.3133106

Paper Title: "Neural Diverse Abstractive Sentence Compression Generation"

Paper: link.springer.com/chapter/10.1007/978-3-030-15719-7_14

Views: 3,664

Videos

Lecture 38 - Recommender Systems Content based Filtering -- Part 1 | UIUC

7K views · 6 years ago

Lecture 37 - Future of Web Search | UIUC

884 views · 6 years ago

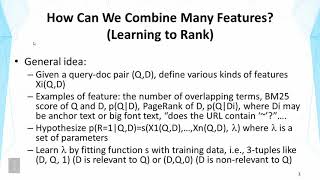

Lecture 36 - Learning to Rank -- Part 3 | UIUC

914 views · 6 years ago

Lecture 35 - Learning to Rank -- Part 2 | UIUC

1.3K views · 6 years ago

Lecture 34 - Learning to Rank -- Part 1 | UIUC

2.9K views · 6 years ago

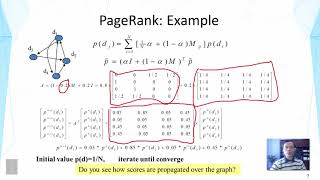

Lecture 33 - Link Analysis -- Part 3 | UIUC

825 views · 6 years ago

Lecture 32 - Link Analysis -- Part 2 | UIUC

1.1K views · 6 years ago

Lecture 31 - Link Analysis -- Part 1 | UIUC

1.3K views · 6 years ago

Lecture 30 - Web Indexing | UIUC

1.3K views · 6 years ago

Lecture 29 - Web Search Introduction & Web Crawler | UIUC

8K views · 6 years ago

Lecture 28 - Feedback in Text Retrieval Feedback in LM | UIUC

972 views · 6 years ago

Lecture 27 - Feedback in Vector Space Model | UIUC

3.4K views · 6 years ago

Lecture 40 - Recommender Systems Collaborative Filtering -- Part 1 | UIUC

1.9K views · 6 years ago

Lecture 41 - Recommender Systems Collaborative Filtering -- Part 2 | UIUC

1.4K views · 6 years ago

Lecture 42 - Recommender Systems Collaborative Filtering -- Part 3

965 views · 6 years ago

Lecture 26 - Feedback in Text Retrieval | UIUC

1K views · 6 years ago

Lecture 12 - System Implementation Fast Search | UIUC

1.1K views · 6 years ago

Lecture 13 - Evaluation of TR Systems | UIUC

1.4K views · 6 years ago

Lecture 14 - Evaluation of TR Systems Basic Measures | UIUC

1.2K views · 6 years ago

Lecture 15 - Evaluation of TR Systems Evaluating Ranked Lists -- Part 1 | UIUC

1.2K views · 6 years ago

Lecture 16 - Evaluation of TR Systems Evaluating Ranked Lists -- Part 2 | UIUC

1.4K views · 6 years ago

Lecture 17 - Evaluation of TR Systems Multi Level Judgements | UIUC

957 views · 6 years ago

Lecture 18 - Evaluation of TR Systems Practical Issues | UIUC

901 views · 6 years ago

Lecture 19 - Probabilistic Retrieval Model Basic Idea | UIUC

20K views · 6 years ago

Lecture 20 - Statistical Language Models | UIUC

3.6K views · 6 years ago

Lecture 21 - Query Likelihood Retrieval Function | UIUC

3.9K views · 6 years ago

Lecture 22 - Smoothing of Language Model -- Part 1 | UIUC

2K views · 6 years ago

Lecture 23 - Smoothing of Language Model -- Part 2 | UIUC

967 views · 6 years ago

This was great to watch! Thank you and God bless!

missed u , prev prof was horrible

this video is the best to SVD, the best, the best!!!

for the last slide, you should impose the condition in the minimization that the sum of y is 0. otherwise the constant vector of all 1's is a trivial soln (corresponding to lambda_1)
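The point in the comment above can be checked on a toy graph. The sketch below is only an illustration: the 4-node path graph and the helper names `laplacian` and `quadratic_form` are assumptions, not something taken from the lecture slides. It shows that the all-ones vector makes the Laplacian quadratic form vanish, which is why a constraint such as "the entries of y sum to 0" (together with some normalization of y) is usually imposed before minimizing.

```python
def laplacian(n, edges):
    """Build L = D - A for an undirected graph given as (i, j) edge pairs."""
    L = [[0] * n for _ in range(n)]
    for i, j in edges:
        L[i][i] += 1
        L[j][j] += 1
        L[i][j] -= 1
        L[j][i] -= 1
    return L


def quadratic_form(L, y):
    """Compute y^T L y, which equals the sum of (y_i - y_j)^2 over all edges."""
    n = len(y)
    return sum(y[i] * L[i][j] * y[j] for i in range(n) for j in range(n))


# Hypothetical example: path graph 0 - 1 - 2 - 3.
edges = [(0, 1), (1, 2), (2, 3)]
L = laplacian(4, edges)

ones = [1, 1, 1, 1]        # constant vector: the trivial minimizer
centered = [-3, -1, 1, 3]  # entries sum to zero, so it is orthogonal to `ones`

trivial_cost = quadratic_form(L, ones)
centered_cost = quadratic_form(L, centered)
```

Any vector orthogonal to the constant vector pays a strictly positive cost on a connected graph, so the constrained problem has a non-trivial answer.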

such great presentation with great insights. so we can interpret a vector as a scalar function on the vertex set. multiplication with the adj matrix can be seen as averaging out this function wrt to its neighbors. so in a way, this is like the linear-algebraic version of the heat equation

this smoothing interpretation makes the normalized laplacian the undisputed discrete counterpart to the riemannian laplacian. in short, the C0 story of the laplacian is measuring the difference between the function value and its average (this is an adaptation of harmonic functions satisfying the mean-value-property). therefore, the discrete analog of this story is to consider I - AD^{-1}, where I is the id matrix, A is the adj matrix, and D is the diagonal matrix.
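A small sketch of the averaging picture described in the two comments above, under stated assumptions: the graph is a hypothetical undirected path, and the code applies the row-normalized form I - D^{-1}A so that each vertex value is compared with the mean over its neighbors. For an undirected graph this matrix is the transpose of the I - AD^{-1} written in the comment, so the two share the same eigenvalues.

```python
from fractions import Fraction


def neighbor_average(adj, f):
    """Apply D^{-1} A: replace each vertex value by the mean over its neighbors."""
    return [Fraction(sum(f[j] for j in nbrs), len(nbrs)) for nbrs in adj]


def random_walk_laplacian(adj, f):
    """Apply I - D^{-1} A: value at a vertex minus its neighbor average."""
    avg = neighbor_average(adj, f)
    return [fi - ai for fi, ai in zip(f, avg)]


# Hypothetical example: path graph 0 - 1 - 2 - 3 as adjacency lists.
adj = [[1], [0, 2], [1, 3], [2]]

constant = [5, 5, 5, 5]
linear = [0, 1, 2, 3]

constant_result = random_walk_laplacian(adj, constant)
linear_result = random_walk_laplacian(adj, linear)
```

The linear function is unchanged by averaging at the two interior vertices, which is the discrete version of the mean-value property the comment mentions; only the endpoints, which have a single neighbor, give a nonzero result.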

best explanation of PCA 🔥🔥

this is too boring and the more sad part is that it comes from Stanford uni

Thank you for this video, it has been very helpful

Turkish subtitles please ❤

Thank you so much!

I LOVE JURE!

very clear and straight forward, thank you for making this video

Insightful!!

At 4:25 I don't understand why there is only one block of size 8. It looks like there should be two of 8 and the block of size 16 should be shifted by 8 (and then the same reasoning could apply). However he computes everything as if it was ok, am I missing something?

When a user does not learn mic placement 101 when recording video, a bad user experience will be the result. Isn't it ironic

So what's the difference between negative loss likelihood and cross-entropy?

Great introduction.

Very well explained, thank you so much!

Hes genius

They are saying that the decomposition is unique, but this isn't true.

good !

5:40 those are even human can't understand.

I never really understood the concept behind SVD until now. The example in the first minute made everything click!

Helped me totally grok! Thanks so much prof.

Great lessons, thank you Stanford.

I wonder if liquid/ODE echo state nets could model high-dimensional systems better

This is legendary introduction of RMSProp to wider world, paper was only written after this. Interesting enough almost on the next slide where Geoffrey recommends algorithms to pick he suggests RMSProp with Momentum which is indeed the principle of Adam - current industry standard.

Thanks. I was able to understand the concept of SVD. Very clean explained

How do I calculate that fiedler vector?

when exactly we passed from x being just a vector to supposing that x is an eigenvector? Thanks in advance

@5:35, Please correct your statement. SVD is applicable for both Real and Complex matrices.

Prof is excellent in delivering essential pieces, I find this very informative

How you describe this study should realistically prove the corrupt person's in authority > though what should correlate are those people that are intent on totally abusing all our fundamental rights which includes the corrupt Malta government staff, lawfirms, Notaries, Police, judges who use Bogus Court Claims to steal all our assets

And when you don't want to be connected, but are being intellectually trafficked, through frequencies you help promote? like myself, at this time? You don't care, until you are held a silent hostage, ignored, trafficked, silenced.

the notation for factoring R as Q and P can be made better as follows : Q ----> matrix of shape (k x m) where m = no. of movies and k = latent vector dimensions P -----> matrix of shape (k x n) where n = no. of users and k = latent vector dimensions R ~ transpose(Q)*P this gives a nice view as the latent vectors are all packed as columns in both matrices P and Q
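The shape convention proposed in the comment above can be sketched as follows. The numbers and the function name `predict_ratings` are made up for illustration; only the shapes (Q is k x m, P is k x n, R is approximated by the transpose of Q times P) come from the comment.

```python
def predict_ratings(Q, P):
    """Return R_hat = Q^T P, with Q of shape (k x m) and P of shape (k x n).

    Column i of Q is the latent vector of movie i; column u of P is the
    latent vector of user u. Entry R_hat[i][u] is their dot product.
    """
    k = len(Q)
    m, n = len(Q[0]), len(P[0])
    return [
        [sum(Q[f][i] * P[f][u] for f in range(k)) for u in range(n)]
        for i in range(m)
    ]


# Hypothetical factors: k = 2 latent dimensions, m = 3 movies, n = 2 users.
Q = [[1, 0, 2],
     [0, 1, 1]]
P = [[1, 2],
     [3, 0]]

R_hat = predict_ratings(Q, P)  # shape (m x n) = (3 x 2)
```

With this layout every latent vector is a column, so a predicted rating is always the dot product of one column of Q with one column of P.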

indians are the best teachers

Thank you for your clear explanation and great effort sir. And what is evaluation techniques to evaluate these content based recommendation systems sir, Could you please answer it will be more helpful sir

Thank you very much!!!

Godfather of AI ❤🙏

Hinton is one of the greats in machine learning so how can he mess up the indices this badly?

Amazing video. All clear now.

I would love to see a little explanation of the complexity that this creates, and how it is better than pairwise comparison. How is e.g. the pairwise comparison of band hashes avoided in this circumstance?

Is the equation at the slide 22 correct? I mean, the SVD there is not the approximation, right? The optimal error equation only makes sense when applied over the truncated SVD approximation. Otherwise, that subtraction would be equal zero.
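The distinction raised in the question above can be illustrated without seeing the slide itself. The sketch assumes the slide refers to the standard low-rank approximation result in the Frobenius norm, and it uses a diagonal matrix so that the singular values can be read off directly (U and V are identity matrices). The helper names are hypothetical.

```python
import math


def truncate_singular_values(singular_values, k):
    """Keep the k largest singular values and zero out the rest."""
    ordered = sorted(singular_values, reverse=True)
    return ordered[:k] + [0.0] * (len(ordered) - k)


def frobenius_error(singular_values, approx):
    """Frobenius norm of the difference between two diagonal matrices."""
    ordered = sorted(singular_values, reverse=True)
    return math.sqrt(sum((s - a) ** 2 for s, a in zip(ordered, approx)))


# Hypothetical matrix diag(3, 2, 1): its singular values are 3, 2 and 1.
sigma = [3.0, 2.0, 1.0]

# Full SVD reproduces the matrix exactly, so the error is zero.
full_error = frobenius_error(sigma, truncate_singular_values(sigma, 3))

# Rank-1 truncation drops 2 and 1, so the error is sqrt(2^2 + 1^2).
rank1_error = frobenius_error(sigma, truncate_singular_values(sigma, 1))
```

This matches the commenter's reading: the error is only nonzero for the truncated reconstruction, where it is determined by the discarded singular values.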

bla bla bla ¨!!!!

He was in the wave earlier than gpt

great video, very informative, thanks professor!

Thanks to Dragomir. I've finally managed to watch all the videos. RIP, Drago...

this part is really difficult to understand for me.

Each training case is defined by an input vector, and using this input vector as the normal, we get a plane. All the "correct" weight vectors lie in one side of the plane. All vectors on the other side of the plane are all "wrong", because their dot products with the input vector are all negative (or all positive). Dot product is the weighted sum which is the output of the function.

@@JohnTheHumbleMan I don't believe this is correct but feel free to push back and teach me something. I believe what Hinton is alluding to, perhaps not very clearly, is that the training cases are helping to define this separation boundary -- this hyperplane. And he sort of makes his explanation a little too hand-wavy and fuzzy by saying "we can think of every training case as a hyperplane " what he really means is that *the collection of all of the training cases taken together* define a hyperplane (a decision-boundary for the neural network's classification purposes to tell you if something is A or B). It looks like since this entire process is a means to an end of finding this hyperplane/decision boundary he is glossing over the details with his statement "we can think of every training case as a hyperplane". By his own definition a training case is just a vector and by the definition of a plane -- you need two vectors to form it. So what is the second vector that forms this training plane? it isn't anything -- there is no plane formed by each individual training case, this is just a poor explanation. It is just a case of Hinton focusing on the point of this training and abstracting away details while plebs like us who are trying to understand the details are looking at the explanation very close and getting confused
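The weight-space picture debated in the two comments above can be made concrete with a minimal sketch. It assumes a binary threshold unit with no bias term and a made-up two-dimensional training case; none of the numbers come from the lecture. In weight space, a single input vector x is enough to fix a hyperplane, namely the set of weight vectors w whose dot product with x is zero, because x acts as the normal of that hyperplane.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def is_correct(weights, x, target):
    """Binary threshold unit without bias: output 1 if w.x >= 0, else 0."""
    output = 1 if dot(weights, x) >= 0 else 0
    return output == target


# Hypothetical training case: input x with target output 1.
x = [1.0, 2.0]
target = 1

good_w = [2.0, 1.0]     # w.x = 4, on the correct side of the hyperplane
bad_w = [-2.0, -1.0]    # w.x = -4, on the wrong side
on_plane = [2.0, -1.0]  # w.x = 0, lies exactly on the hyperplane

good_ok = is_correct(good_w, x, target)
bad_ok = is_correct(bad_w, x, target)
```

Each additional training case adds another such hyperplane, and a weight vector that classifies every case correctly has to lie on the correct side of all of them at once.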

Hueta

Best explanation ever! Simple yet comprehensive.